Made with ❤️ and GitHub Copilot

1 Introduction

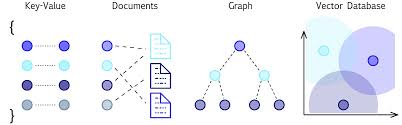

Vector databases store high-dimensional numerical vectors and enable fast similarity search. They are widely used in Retrieval-Augmented Generation (RAG) systems, recommendation engines, and computer vision applications.

This post covers the fundamentals of vector databases, gives an overview of RAG, and provides a Python implementation using FAISS, a popular library for fast similarity search.

2 What is a Vector Database?

A vector database is optimized for storing and querying high-dimensional vectors efficiently. Unlike traditional databases that use structured queries, vector databases retrieve data using similarity measures like:

- Cosine Similarity (cosine of the angle between vectors)

- Euclidean Distance (L2 norm)

- Inner Product (dot product)
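As a quick illustration, the three measures can be computed with NumPy. This is a minimal sketch; the function names and sample vectors are made up for this example.

```python
import numpy as np


def cosine_similarity(a: np.ndarray, b: np.ndarray) -> float:
    """Cosine of the angle between a and b (1.0 means same direction)."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


def euclidean_distance(a: np.ndarray, b: np.ndarray) -> float:
    """L2 norm of the difference (0.0 means identical vectors)."""
    return float(np.linalg.norm(a - b))


def inner_product(a: np.ndarray, b: np.ndarray) -> float:
    """Dot product; grows with both alignment and vector length."""
    return float(a @ b)


a = np.array([1.0, 0.0])
b = np.array([2.0, 0.0])  # same direction as a, twice the length
c = np.array([0.0, 1.0])  # orthogonal to a

print(cosine_similarity(a, b), euclidean_distance(a, b), inner_product(a, b))
print(cosine_similarity(a, c), euclidean_distance(a, c), inner_product(a, c))
```

Note that cosine similarity ignores vector length (a and b score 1.0), while Euclidean distance and inner product do not.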

2.1 Use Cases

- Retrieval-Augmented Generation (RAG): Enhancing LLM responses

- Recommendation Systems: Finding similar users or products

- Image & Video Search: Searching by content rather than metadata

3 What is Retrieval-Augmented Generation (RAG)?

Retrieval-Augmented Generation (RAG) is an AI framework that combines retrieval-based search with generative models to improve the quality and accuracy of text generation. Instead of relying only on a pre-trained language model’s internal knowledge, RAG dynamically fetches relevant information from external sources (like a database, vector store, or web search) to generate more informed and up-to-date responses.

How RAG Works

- Retrieval Step

  - Given a query, the system retrieves relevant documents from an external knowledge base (e.g., a vector database like FAISS, Pinecone, or a search engine).

  - Common retrieval methods include dense vector search (e.g., using embeddings from transformers like BERT or OpenAI embeddings) and keyword search.

- Augmentation Step

  - The retrieved documents are provided as additional context to a large language model (LLM).

  - This allows the model to generate responses based on both its pre-trained knowledge and real-time, external information.

- Generation Step

  - The LLM synthesizes an answer, incorporating the retrieved knowledge while ensuring coherence and fluency.
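The three steps above can be sketched in a few lines of Python. Everything here is a toy stand-in: `DOCS`, `embed`, `retrieve`, `build_prompt`, and `generate` are hypothetical names, the embedding is a simple bag-of-words count rather than a real model, and `generate` is a stub where an actual LLM call would go.

```python
import numpy as np

# Hypothetical toy knowledge base
DOCS = [
    "FAISS is a library for efficient similarity search.",
    "RAG combines retrieval with text generation.",
    "Bananas are rich in potassium.",
]


def tokenize(text: str) -> list:
    return [t.strip(".,?!").lower() for t in text.split()]


# Vocabulary built from the documents; unknown query words are ignored
VOCAB = {tok: i for i, tok in enumerate(sorted({t for d in DOCS for t in tokenize(d)}))}


def embed(text: str) -> np.ndarray:
    """Toy bag-of-words embedding; a stand-in for a real embedding model."""
    vec = np.zeros(len(VOCAB))
    for tok in tokenize(text):
        if tok in VOCAB:
            vec[VOCAB[tok]] += 1.0
    return vec


DOC_VECTORS = np.array([embed(d) for d in DOCS])


def retrieve(query: str, k: int = 1) -> list:
    """Retrieval step: return the k documents most similar to the query."""
    q = embed(query)
    norms = np.linalg.norm(DOC_VECTORS, axis=1) * np.linalg.norm(q)
    sims = (DOC_VECTORS @ q) / np.maximum(norms, 1e-12)
    top = np.argsort(-sims)[:k]
    return [DOCS[i] for i in top]


def build_prompt(query: str, context_docs: list) -> str:
    """Augmentation step: place the retrieved text in front of the question."""
    context = "\n".join(f"- {d}" for d in context_docs)
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"


def generate(prompt: str) -> str:
    """Generation step: placeholder for an LLM call (assumption, not a real API)."""
    return f"(stub LLM response to a {len(prompt)}-character prompt)"


query = "What is FAISS?"
context = retrieve(query, k=1)
prompt = build_prompt(query, context)
answer = generate(prompt)
print(prompt)
print(answer)
```

In a real system, `embed` would call an embedding model, `retrieve` would query a vector database, and `generate` would call an LLM with the assembled prompt.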

Benefits of RAG

- More Accurate & Up-to-Date: Retrieves real-time or domain-specific knowledge, reducing hallucinations.

- Interpretable: Users can see the sources used for generating responses.

- Efficient: Allows smaller models to perform better by offloading factual knowledge to retrieval systems.

Use Cases

- Chatbots & Virtual Assistants: Improved customer support with company-specific knowledge.

- Enterprise Search: Querying internal documents dynamically.

- Medical & Legal AI: Ensuring responses are based on authoritative sources.

4 How Are Best Matches Found for a Query?

The best matches for a given query vector are found using nearest neighbor search (NNS) techniques. The most common method is k-nearest neighbors (k-NN), which identifies the top-k closest vectors in the database based on a similarity metric.

For large-scale search, approximate nearest neighbor (ANN) methods such as Hierarchical Navigable Small World (HNSW) graphs and Product Quantization (PQ) improve speed and memory use, typically at the cost of a small loss in accuracy.
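For reference, exact k-NN is short enough to write directly in NumPy. The sketch below assumes Euclidean distance; the function name `knn_search` and the sample data are illustrative only.

```python
import numpy as np


def knn_search(database: np.ndarray, query: np.ndarray, k: int):
    """Exact k-NN by Euclidean distance; returns (indices, distances), nearest first."""
    distances = np.linalg.norm(database - query, axis=1)
    k = min(k, len(database))
    # argpartition selects the k smallest distances without a full sort
    candidates = np.argpartition(distances, k - 1)[:k]
    # Sort only the k candidates so the nearest comes first
    order = candidates[np.argsort(distances[candidates])]
    return order, distances[order]


database = np.array([[0.0, 0.0], [1.0, 1.0], [5.0, 5.0], [0.9, 1.2]])
query = np.array([1.0, 1.0])

indices, dists = knn_search(database, query, k=2)
print(indices, dists)
```

This compares the query against every stored vector, so the cost grows linearly with the size of the database. That linear scan is what approximate indexes are designed to avoid.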

4.1 k-NN in FAISS

FAISS provides both exact and approximate k-NN search.

- Exact Search: Uses brute-force comparison for the most accurate results.

- Approximate Search: Uses indexing structures like IVF (Inverted File Index) and HNSW for faster retrieval at scale.

5 Implementing a Simple Vector Database in Python

We’ll start by implementing a minimal in-memory vector database in Python before introducing FAISS for efficient retrieval.

5.1 Step 1: Stub Implementation

Below is a basic skeleton for a vector database.

```python
from typing import List, Optional, Tuple

import numpy as np


class SimpleVectorDB:
    def __init__(self, dim: int):
        """Initialize the vector database with a given dimensionality."""
        self.dim = dim
        self.vectors: List[np.ndarray] = []  # List of stored vectors
        self.metadata: List[Optional[dict]] = []  # Optional metadata for each vector

    def add_vector(self, vector: np.ndarray, meta: Optional[dict] = None) -> int:
        """Add a new vector with optional metadata and return its index."""
        vector = np.asarray(vector, dtype=np.float64)
        if vector.shape != (self.dim,):
            raise ValueError(f"Expected shape ({self.dim},), got {vector.shape}")
        self.vectors.append(vector)
        self.metadata.append(meta)
        return len(self.vectors) - 1

    def search(self, query: np.ndarray, k: int = 5) -> List[Tuple[int, float]]:
        """Find the top-k closest vectors using cosine similarity."""
        if not self.vectors:
            return []
        matrix = np.array(self.vectors)
        norms = np.linalg.norm(matrix, axis=1) * np.linalg.norm(query)
        # Guard against division by zero for all-zero vectors
        similarities = (matrix @ query) / np.maximum(norms, 1e-12)
        top_k = np.argsort(-similarities)[:k]
        return [(int(i), float(similarities[i])) for i in top_k]

    def get_vector(self, index: int) -> Optional[np.ndarray]:
        """Retrieve a vector by index, or None if the index is out of range."""
        return self.vectors[index] if 0 <= index < len(self.vectors) else None
```

5.2 Step 2: Using FAISS for Fast Search

FAISS (Facebook AI Similarity Search) is optimized for large-scale vector search.

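```python
import faiss
import numpy as np


class FaissVectorDB:
    def __init__(self, dim: int):
        """Initialize a FAISS-based vector database."""
        self.index = faiss.IndexFlatL2(dim)  # Exact (brute-force) L2 distance index
        self.vectors = []

    def add_vector(self, vector: np.ndarray):
        """Add a new vector to the FAISS index."""
        # FAISS expects a 2-D float32 array of shape (n, dim)
        vector = np.asarray(vector, dtype=np.float32)
        self.index.add(vector.reshape(1, -1))
        self.vectors.append(vector)

    def search(self, query: np.ndarray, k: int = 5):
        """Retrieve the top-k closest vectors as (index, squared L2 distance) pairs."""
        query = np.asarray(query, dtype=np.float32).reshape(1, -1)
        distances, indices = self.index.search(query, k)
        # FAISS pads the result with -1 when fewer than k vectors are stored
        return [(int(i), float(d)) for i, d in zip(indices[0], distances[0]) if i != -1]
```

6 Conclusion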

Vector databases are powerful tools for similarity search and information retrieval. This post introduced a simple in-memory implementation and a FAISS-backed version that uses exact L2 search. Future improvements could include HNSW for approximate search or metadata storage alongside the FAISS index.

Would you like a follow-up post on integrating FAISS with RAG? Let me know in the comments!